The release of ChatGPT, a text-generating AI, has stoked fears about academic dishonesty at Loyola. Some professors have taken the issue head-on with revised assignments and ethical discussions.

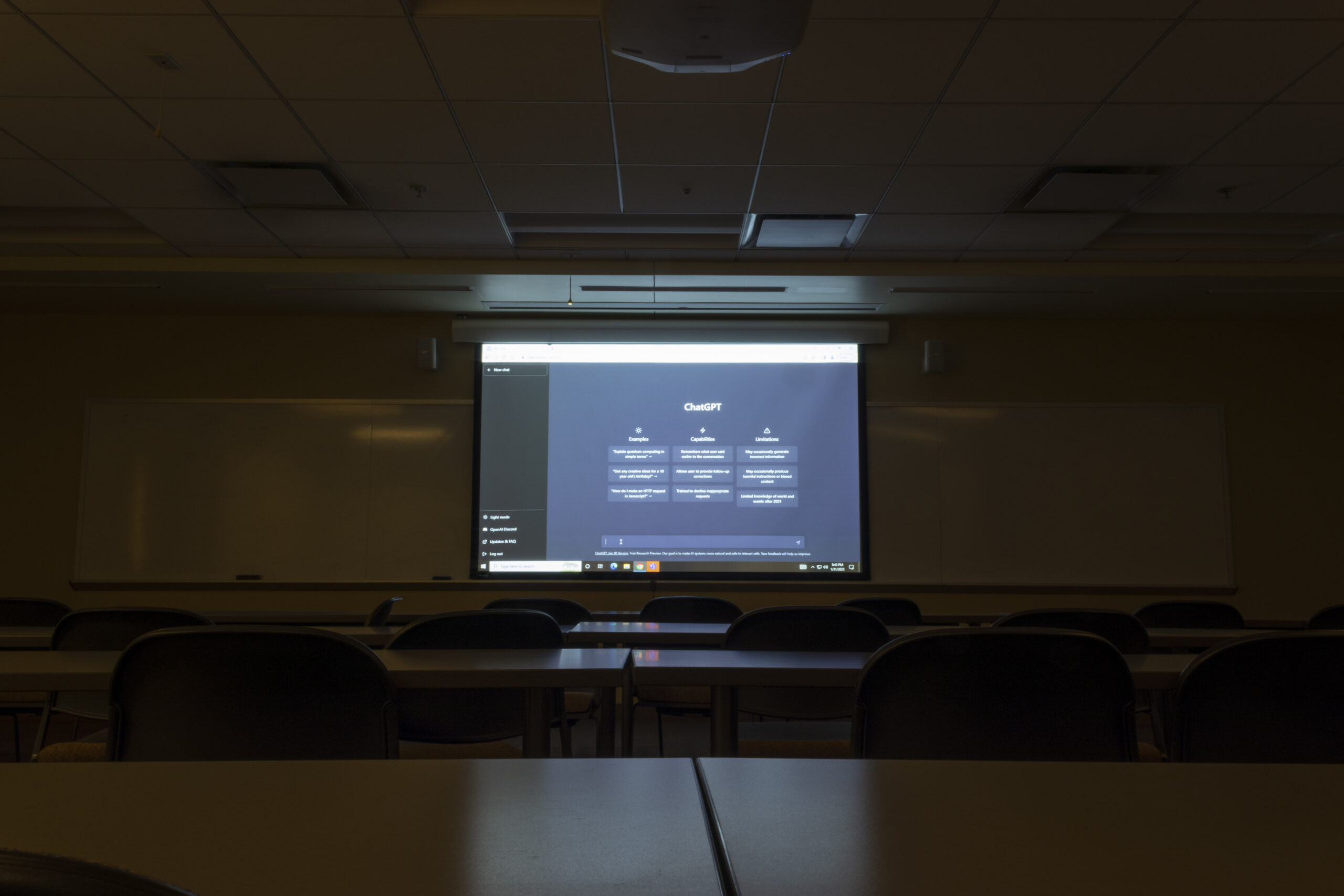

Late last year, professors, students and administrators were faced with the release of ChatGPT, an artificial intelligence (AI) program that takes user prompts and writes a response in minutes.

The AI can create poems, song lyrics and, most concerning for schools, entire essays. Using online data archives, developers trained ChatGPT on the collective knowledge of the Internet, according to Dr. Michael Burns, an assistant professor of biology at Loyola. OpenAI, the company that developed ChatGPT, researches and creates AI systems that are released to the public, according to its website.

Three Loyola professors addressed fears of academic dishonesty with a session at this semester’s Focus on Teaching and Learning, a virtual professional development conference, on Jan. 12.

Susan Haarman, associate director of the Center for Engaged Learning, Teaching and Scholarship; Dr. Joe Vukov, an associate professor in the philosophy department; and Burns said ChatGPT should be regarded as a new tool, not an instant cheating button. Their PowerPoint presentation detailed ways to incorporate AI software into the classroom.

Vukov suggested ChatGPT could be used as an aid for creating syllabi, lesson plans and even letters of recommendation. The AI program can also spark discussions about its training data, usage and limitations. However, Vukov said the new software struggles to address questions related to ethics and often avoids taking any hard moral stances. Burns said this aspect was intentionally designed by the developers.

“The AI is giving kind of wishy-washy answers, where it’s taking a hard centrist approach on a lot of these things,” Burns said at the conference.

Burns said this approach to ethics was programmed in to keep ChatGPT’s responses inoffensive.

For professors concerned about academic dishonesty, Burns said there is software that can detect AI-generated text. GPTZero analyzes text and determines whether it was written by a human or ChatGPT. However, Burns said the program isn’t perfect and may give incorrect results. Burns said OpenAI is developing its own AI detector.

Haarman assured professors that ChatGPT isn’t the end of education. The program is limited in matters of accuracy, personal experience and current events. She suggested that class assignments incorporate these elements to dissuade students from using the AI software.

“The bots and AI cannot replicate their own opinions and their own voice,” Haarman said at the conference. “What you are and what you see cannot be found in an algorithm.”

With the start of the new semester, several professors have embraced text-generating programs. Dr. Kristen Irwin, assistant professor of philosophy in the Honors Program, plans to incorporate ChatGPT into her discussion section later in the semester.

In a game she calls “Break the Bot,” students will use the course’s texts to write prompts the AI can’t make sense of, making it spit out gibberish.

Irwin also said she noticed how formulaic ChatGPT’s essays are. She said professors in the Honors Program want to read creative essays that break away from the five-paragraph format.

At the same time, Irwin has several concerns about the information ChatGPT draws from. With the entire Internet used as its training data, the AI has been exposed to the worst things people have said to each other, she said.

“It’s important to know what its limitations are,” Irwin said. “Its training data is racist, sexist and homophobic. It spits out very boilerplate essays and most problematically, it hinders people from finding their own voice.”

Dr. Michael Schumacher, a lecturer in the Department of Political Science, also discussed ChatGPT on the first day of classes. He teaches three sections of Political Theory. With at least 40 students in each class all reading ancient texts, Schumacher said he encounters academic dishonesty most often in this course.

When talking about the AI, Schumacher treated it like any other tool that could be used to cheat. He compared it to SparkNotes, a popular website that provides study guides for courses in literature, history and other subjects.

“I understand the tools that are out there,” Schumacher said. “I can’t prevent you from using them. It is your responsibility as individuals to use them just as that — tools.”

Schumacher said he encourages his students to use these resources only as study tools and not as replacements for their own work.

Madison Passon, a first-year psychology major, said she didn’t hear about ChatGPT until her honors professors brought it up. Passon said every time she’s tried to access it since, the site has been at capacity. She said she hopes students don’t try to use it.

Kamdyn Rhodes, a senior criminal justice major, said there are plenty of applications for ChatGPT outside of academics. She said it could potentially be used to help fill out government applications or write letters to legislators. In school, though, Rhodes said it has more cons than pros.

“It can stifle learning if you have a computer write everything for you,” Rhodes said.

Featured image by Holden Green